Hierarchical Stochastic Neighbor Embedding

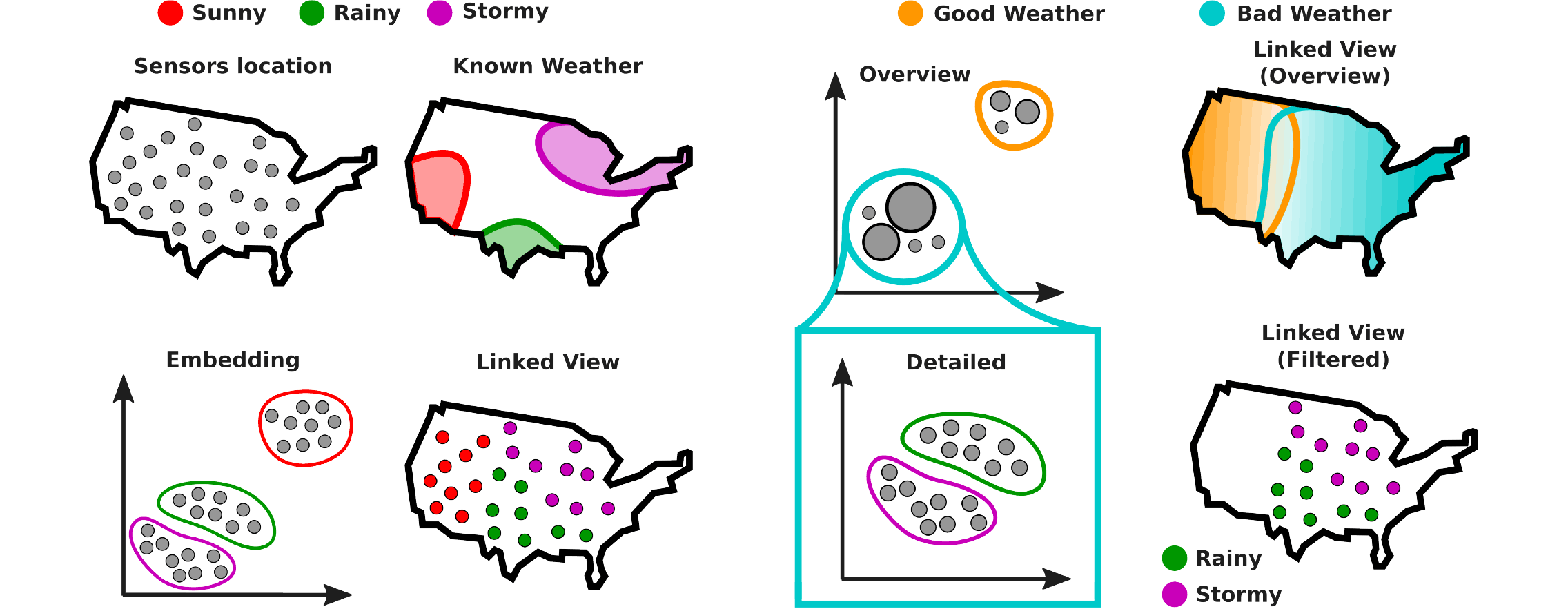

In recent years, dimensionality-reduction techniques have been developed and are now widely used for hypothesis generation in Exploratory Data Analysis. However, these techniques face a trade-off between computation time and the quality of the resulting dimensionality reduction. In this work, we address this limitation by introducing Hierarchical Stochastic Neighbor Embedding (Hierarchical-SNE). Using a hierarchical representation of the data, we bring the well-known mantra of Overview-First, Details-On-Demand to non-linear dimensionality reduction. First, the analysis shows an embedding that reveals only the dominant structures in the data (Overview). Then, by selecting structures that are visible in the overview, the user can filter the data and drill down in the hierarchy. As the user descends into the hierarchy, detailed visualizations of the high-dimensional structures can lead to new insights. In this paper, we explain how Hierarchical-SNE scales to the analysis of big datasets. In addition, we show its application potential in the visualization of Deep-Learning architectures and the analysis of hyperspectral images.
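The drill-down step described above can be illustrated with a small sketch. It assumes a hypothetical layout in which each landmark at a coarse scale stores weights over the points of the next finer scale that it represents. The `drill_down` function, the `influence` dictionaries and the 0.5 `threshold` are illustrative assumptions, not the data structures or parameters used by Hierarchical-SNE itself.

```python
# Hypothetical sketch of filtering the data while drilling down one level
# in an Overview-First, Details-On-Demand hierarchy.
# This is NOT the authors' implementation; names and threshold are assumed.

# influence[lm] maps a coarse-scale landmark `lm` to {finer_point: weight},
# i.e. how strongly the landmark represents each point one scale below.
Influence = dict[int, dict[int, float]]


def drill_down(influence: Influence, selected: set[int], threshold: float = 0.5) -> set[int]:
    """Return the finer-scale points mostly represented by the selected landmarks."""
    totals: dict[int, float] = {}
    for lm in selected:
        # Accumulate the weight each finer point receives from the selection.
        for point, weight in influence.get(lm, {}).items():
            totals[point] = totals.get(point, 0.0) + weight
    # Keep only points whose accumulated weight exceeds the threshold.
    return {p for p, w in totals.items() if w > threshold}


# Toy overview with two landmarks summarizing five finer points;
# point 2 is shared between both landmarks (weights per point sum to 1).
top = {
    0: {0: 1.0, 1: 1.0, 2: 0.6},
    1: {2: 0.4, 3: 1.0, 4: 1.0},
}
print(sorted(drill_down(top, {0})))  # [0, 1, 2]
print(sorted(drill_down(top, {1})))  # [3, 4]
```

In this toy data, a point shared between a selected and an unselected landmark (point 2) ends up with whichever side represents it more strongly. The returned subset is what would then be embedded in the more detailed view.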


Citation

BibTeX

@article{bib:2016_eurovis_hsne,
  author = {Nicola Pezzotti and Thomas H{\"o}llt and Boudewijn Lelieveldt and Elmar Eisemann and Anna Vilanova},
  title = {Hierarchical Stochastic Neighbor Embedding},
  journal = {Computer Graphics Forum (Proceedings of EuroVis 2016)},
  volume = {35},
  number = {3},
  pages = {21--30},
  year = {2016},
  doi = {10.1111/cgf.12878},
}